Microsoft has bought twice as many of Nvidia’s flagship chips as any of its biggest rivals in the United States and China this year, as OpenAI’s biggest investor has accelerated its investment in artificial intelligence infrastructure.

Analysts at Omdia, a technology consultancy, estimate that Microsoft has bought 485,000 “Hopper” chips from Nvidia this year. That puts Microsoft far ahead of Nvidia’s second-largest U.S. customer, Meta, which has bought 224,000 Hopper chips, as well as cloud computing rivals Amazon and Google.

While demand has outpaced supply of Nvidia’s most advanced graphics processing units for much of the past two years, Microsoft’s chip stockpile has given it an edge in the race to build the next generation of AI systems.

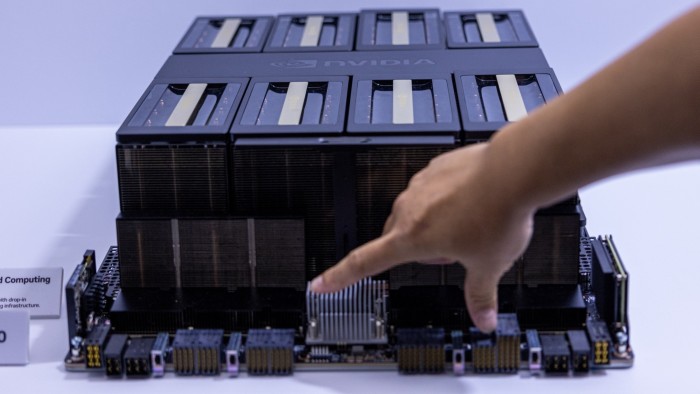

This year, big tech companies have spent tens of billions of dollars on data centers running Nvidia’s latest chips, which have become Silicon Valley’s hottest product since ChatGPT’s debut two years ago triggered an unprecedented wave of investment in AI.

Microsoft’s Azure cloud infrastructure was used to train OpenAI’s latest o1 model, as they race against a resurgent Google, start-ups such as Anthropic and Elon Musk’s xAI, and rivals in China for dominance in the next generation of computing.

Omdia estimates that ByteDance and Tencent each ordered about 230,000 Nvidia chips this year, including the H20 model, a less powerful version of Hopper that was modified to meet U.S. export controls for Chinese customers.

Amazon and Google, which along with Meta are accelerating the deployment of their own custom AI chips as an alternative to Nvidia’s, bought 196,000 and 169,000 Hopper chips, respectively, analysts said.

Omdia analyzes companies’ disclosed capital expenditures, server shipments, and supply chain information to calculate its estimates.

The value of Nvidia, which is now starting to roll out Hopper’s successor, Blackwell, has soared to more than $3 trillion this year as big tech companies race to assemble larger and larger clusters of its GPUs.

However, the stock’s extraordinary rise has eased in recent months amid concerns over slower growth, competition from big tech companies’ custom AI chips, and the potential disruption of its operations in China by the new Donald Trump administration in the United States.

ByteDance and Tencent have become two of Nvidia’s biggest customers this year, despite U.S. government restrictions on the capabilities of American AI chips that can be sold in China.

Microsoft, which has invested $13 billion in OpenAI, has been the most aggressive of the big U.S. tech companies in building data center infrastructure, both to run its own AI services such as its Copilot assistant and to rent out to its customers through its Azure division.

Microsoft’s Nvidia chip orders are more than triple the number of Nvidia’s same-generation AI processors it bought in 2023, when Nvidia was scrambling to expand Hopper production following the resounding success of ChatGPT.

“Good data center infrastructure is a very complex and capital-intensive project,” Alistair Speirs, senior director of Azure Global Infrastructure at Microsoft, told the Financial Times. “It takes several years of planning. It’s therefore important to predict where our growth will be with a little margin.”

Tech companies around the world will spend about $229 billion on servers in 2024, according to Omdia, led by Microsoft’s $31 billion in investments and Amazon’s $26 billion. The top 10 buyers of data center infrastructure, which now include newcomers xAI and CoreWeave, account for 60% of global investment in computing power.

Vlad Galabov, director of cloud and data center research at Omdia, said about 43% of server spending went to Nvidia in 2024.

“Nvidia GPUs were an extremely high share of server capex,” he said. “We’re close to the peak.”

While Nvidia still dominates the AI chip market, its Silicon Valley rival AMD has been making inroads. Meta bought 173,000 MI300 chips from AMD this year, while Microsoft bought 96,000, according to Omdia.

Big tech companies have also ramped up the use of their own AI chips this year, as they try to reduce their reliance on Nvidia. Google, which has been developing its “tensor processing units,” or TPUs, for a decade, and Meta, which launched the first generation of its Meta Training and Inference Accelerator chip last year, have each deployed about 1.5 million of their own chips.

Amazon, which is investing heavily in its Trainium and Inferentia chips for its cloud computing customers, has deployed about 1.3 million of the chips this year. Amazon this month announced plans to build a new cluster using hundreds of thousands of its latest Trainium chips for Anthropic, an OpenAI rival in which Amazon has invested $8 billion, to train the next generation of its AI models.

Microsoft, however, is much earlier in its efforts to build an AI accelerator capable of rivaling Nvidia’s, with only about 200,000 of its Maia chips installed this year.

Speirs said using Nvidia’s chips still required Microsoft to make significant investments in its own technology to offer a “distinctive” service to customers.

“In our experience, building AI infrastructure is not just about having the best chip, it’s also about having the right storage components, the right infrastructure, the right software layer, the right host management layer, error correction and all these other components to build this system,” he said.
